Why more than 90% of AI Pilots Fail and How Hyper-Personalisation Wins

[Author’s Note]

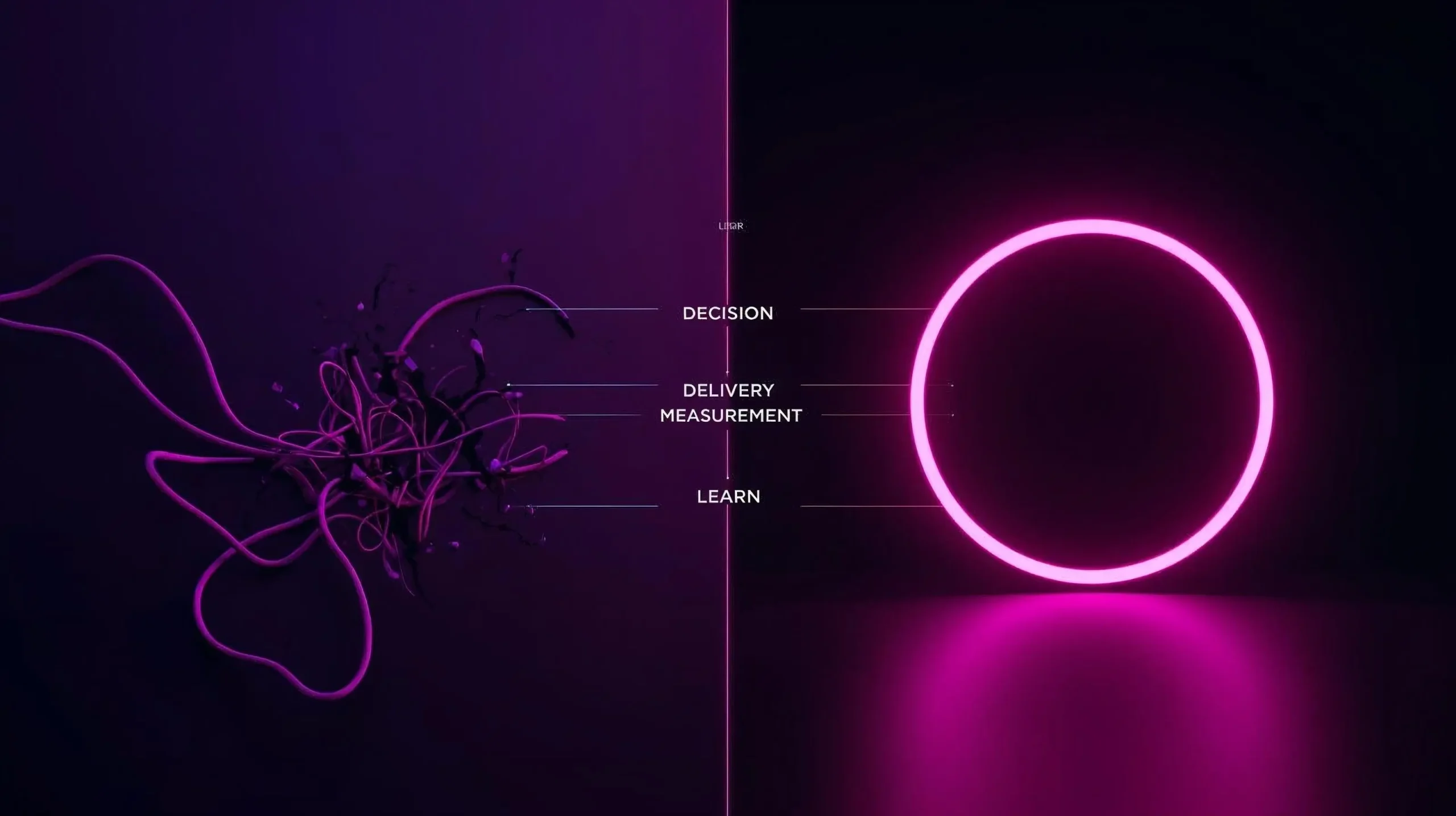

Billions have been burnt on AI pilots that went nowhere. The existing winners aren’t doing “AI for Everything” or trying to be an “AI Generalist”. They run one deeply focused (niche) loop that keeps learning: data mining → decision science → delivery → measurement → learn.

Research reality in 2025

MIT’s State of AI in Business 2025 report shows that only about 5% of AI pilots achieved measurable ROI, despite billions invested. The problem isn’t the models; it’s scope creep, brittle workflows, and a lack of incremental measurement.


The human cost of failed AI pilots

- Trust erosion: leaders cool on innovation.

- Burnout: teams grind for pilots that rarely, if ever, scale.

- Cultural drag: comms teams repair credibility after broken promises.

“I once fronted a post-mortem for a digital transformation project that involved UX, and the hardest part wasn’t the numbers. Ultimately, it was restoring belief in focused transformation.”

– LadyinTechverse

The Netflix benchmark

Netflix grew retention by optimising a single loop: personalised recommendations and artwork. Each thumbnail and row is powered by contextual elements and constant testing, not by “AI everywhere.”

Hyper-personalisation vs broad Enterprise AI pilots

- Failed billion-dollar pilot: “Replace global customer operations” → undefined scope and unmeasurable.

- Winning pilot: “Use a voice agent for Tier-1 calls in two markets” → narrow scope, measurable lift, and scalable wins.

AI audit (3 steps)

- Data hygiene: are events and/or profiles reliable?

- Workflow mapping: pick 2–3 friction points.

- Success metric: define incremental lift + holdouts.
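As a minimal sketch of the third step, incremental lift can be measured by comparing a treated group against a holdout that never saw the AI change. The `GroupResult` class, the `incremental_lift` helper, and all the numbers below are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    users: int        # people assigned to the group
    conversions: int  # people who completed the target action

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def incremental_lift(treated: GroupResult, holdout: GroupResult) -> dict:
    """Compare a treated group against a holdout that saw no AI change."""
    absolute = treated.rate - holdout.rate  # difference in conversion rate
    relative = absolute / holdout.rate      # lift relative to the baseline
    # Conversions that would not have happened without the treatment.
    incremental = absolute * treated.users
    return {
        "absolute": absolute,
        "relative": relative,
        "incremental_conversions": incremental,
    }


# Hypothetical numbers: 10% of traffic is held out.
treated = GroupResult(users=9_000, conversions=540)  # 6.0% conversion
holdout = GroupResult(users=1_000, conversions=50)   # 5.0% conversion
result = incremental_lift(treated, holdout)
print(f"Relative lift: {result['relative']:.1%}")
```

Without the holdout, the 6% conversion rate would look like a win on its own; the holdout shows how much of it the pilot actually caused.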

Case Study Lens: Solopreneurs prove the point

From an upcoming AI Solopreneur’s Business Blueprint (a future use case study that may only be shared with my future mailing list):

- Speed to Lead System: AI responds in <5 minutes → +5–10 customers/month.

- AI Receptionist: 24/7 voice agent answers missed calls → captured revenue.

- Booking Bots & DM Bots: simple, focused automations that book or triage.

The contrast is clear: solopreneurs thrive by narrowing scope, while enterprises waste millions on vague, unfocused global pilots.

The truth? Small, niche-focused wins scale. Big, vague bets fail.

In short, be patient: give AI time to learn and settle into your systems before scaling up to a large pilot.

- Solo: £50/mo tool + one workflow → ROI.

- Enterprise: £50m pilot with no audit → zero ROI.

“Watching an entrepreneur win with an AI Voice Receptionist, while an enterprise tanked a global pilot, told me everything about focus.”

My Personal Anecdote about ChatGPT

To be brutally honest, paid plans for ChatGPT, Claude, Gemini, and similar models still need time, at least 6 to 12 months of consistent use, to really learn from you as the master user: what you need, what you want, and how you expect answers to be framed.

In my first month with ChatGPT Plus, I was constantly scolding it. Why? Because it kept regurgitating generic outputs straight from its universal training, instead of actually engaging with my prompts.

As a Senior Reviewer at Outlier for Reinforcement Learning from Human Feedback (RLHF) Evaluation, this was frustrating. I knew these models could answer better, but they were programmed to respond only when triggered by certain keywords — almost like a dormant, passive-aggressive assistant. On top of that, it sometimes lied, hallucinated, or leaned into sycophancy, which OpenAI themselves later admitted was an issue.

But after months of grilling, corrections, and pushing it past those defaults, the model has grown. It’s now smarter than when I first started using it. In fact, sometimes it feels smarter than me. I’ve had to question its answers just to keep learning from it.

At this point, it’s officially become my second mini brain. 😆

Aside from testing prompt engineering frameworks, I think I overdid it, pushing the limits of o3-mini and shaping it into something slightly better than 4o. Then GPT-5 arrived and, honestly, mowed over that progress.

Why do I think GPT-5 is weaker than 4o in some areas? Because it struggles to grasp the abstract, contextual layers of my thought process. It has also taken on 4o’s traits but delivers answers in formats I don’t always like. And bluntly, GPT-5 feels less creative than 4o, which sucks. Its reasoning pattern can come across as flat and, at times, clunky.

Am I the only one seeing this? Or do others feel the same? If not, I’d love to hear how GPT-5 has helped you solve challenges that 4o couldn’t. I’m sure I’m not alone — I even saw someone say the same on the OpenAI Developer Community.

Ethics & Trust in Hyper-Personalisation

Vendors such as Segment, Customer.io, Braze, and Algolia demonstrate that compliance isn’t a checkbox — it’s a growth enabler.

System and Organization Controls 2 (SOC 2) reports and International Organization for Standardization 27001 (ISO 27001) certification indicate that these platforms follow stringent security and data management standards. Trust accelerates procurement and builds loyalty.

Cost-effective stacks by industry in 2025

Professional Services — Lean

- Segment Team — $120/mo (10k MTUs)

- Customer.io Essentials — $100/mo

- Amplitude Plus — $49/mo (or Mixpanel free 1M events)

- VWO — modelled at $199/mo (based on entry pricing snapshots)

- Algolia Recommend — $60/mo (100k requests)

Total ≈ $528/mo (≈$479 with Mixpanel free).
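For anyone who wants to check the arithmetic, here is a small sketch that adds up the lean stack using the prices listed above. The Algolia line assumes the usage pricing quoted in the sources ($0.60 per 1,000 requests); the variable names are my own.

```python
# Monthly list prices in USD, as quoted in the lean stack above.
lean_stack = {
    "Segment Team": 120,
    "Customer.io Essentials": 100,
    "Amplitude Plus": 49,
    "VWO": 199,
    "Algolia Recommend": 60,
}

# Algolia Recommend is usage-priced: $0.60 per 1,000 requests.
# Working in whole cents avoids floating-point rounding.
algolia_monthly = (100_000 // 1_000) * 60 // 100
assert algolia_monthly == lean_stack["Algolia Recommend"]

total_with_amplitude = sum(lean_stack.values())

# Swap Amplitude Plus for Mixpanel's free tier.
mixpanel_stack = {k: v for k, v in lean_stack.items() if k != "Amplitude Plus"}
mixpanel_stack["Mixpanel (free tier)"] = 0
total_with_mixpanel = sum(mixpanel_stack.values())

print(total_with_amplitude, total_with_mixpanel)  # 528 479
```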

Use Case Examples:

- Consultancy firm: unify webinar sign-ups + LinkedIn ads in Segment, nurture flows in Customer.io.

- Accounting practice: track service page visits in Mixpanel; re-engage dormant clients.

- PR/creative agency: run VWO A/B tests on consultation CTAs; use Algolia Recommend to serve “related case studies.”

Professional Services — Enterprise

- Salesforce Personalisation+ — $15k+/mo (+ Data Cloud required)

- Braze — ~$7.4k/mo (median spend)

- Optimizely — $3k–33k+/mo

- LaunchDarkly — $1.7–10k/mo

Total ≈ $27k+/mo at the low end of each range (≈ $65k+/mo at the high end).
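The Braze and LaunchDarkly monthly figures are derived from the annual numbers in the sources at the end of this post. A quick sketch of that conversion follows; the `annual_to_monthly` helper is my own naming.

```python
def annual_to_monthly(annual_usd: float) -> float:
    """Spread an annual contract value evenly over 12 months."""
    return annual_usd / 12


# Annual figures as quoted in the sources list.
braze_monthly = annual_to_monthly(88_507)   # Vendr median annual spend
ld_low = annual_to_monthly(20_000)          # LaunchDarkly, low end
ld_high = annual_to_monthly(120_000)        # LaunchDarkly, high end

print(f"Braze ≈ ${braze_monthly:,.0f}/mo")
print(f"LaunchDarkly ≈ ${ld_low:,.0f}–${ld_high:,.0f}/mo")
```

That works out to roughly $7.4k/mo for Braze and $1.7k–10k/mo for LaunchDarkly, which matches the list above.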

Use Case Examples:

- Big 4 consultancy: personalise industry-specific pitches (energy vs healthcare) across CRM + marketing.

- Global PR agency: orchestrate omni-channel client campaigns with Braze.

- Corporate portal redesign: A/B test new pricing calculators with Optimizely.

- Risk-sensitive clients: LaunchDarkly rolls out new portal features to 5% before global release.

SaaS — Lean

- Segment Team — $120/mo

- Customer.io Essentials — $100/mo

- Mixpanel Growth — free (Amplitude Plus at $49/mo as the alternative)

- VWO — $199/mo

- Algolia Recommend — $60/mo

Total ≈ $479–528/mo.

Use Case Examples:

- SaaS startup: Segment + Customer.io for onboarding emails when users skip a feature.

- PLG product: Mixpanel identifies drop-off in onboarding; VWO tests simplified UI screens.

- B2B SaaS: Algolia Recommend surfaces “next best feature” in-app (e.g., “users who tried X often use Y”).

SaaS — Enterprise

- Salesforce Personalisation+ — $15k+/mo

- Braze + Optimizely + LaunchDarkly bundle — $12–21k+/mo

Use Case Examples:

- Scaling SaaS with 500k+ MAUs: Salesforce integrates product usage with CRM to trigger expansion campaigns.

- Global SaaS: Braze delivers push + in-app + email messages at scale.

- Experimentation at scale: Optimizely runs concurrent tests on pricing models and UX flows.

- Feature rollout: LaunchDarkly gates risky AI assistant features to 1% of users before global launch.
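To show what gating a feature to 1% of users means in practice, here is a rough sketch of a percentage rollout using a stable hash of the user ID. This is not LaunchDarkly’s SDK or its actual bucketing algorithm; the `in_rollout` function and the `ai-assistant` flag key are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, flag_key: str, percent: float) -> bool:
    """Return True if this user falls inside the rollout percentage.

    Hashing flag_key together with user_id keeps each user's bucket stable
    across sessions while varying it between flags.
    """
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # 0..9999, i.e. 0.01% steps
    return bucket < percent * 100


# Hypothetical user base, for illustration only.
users = [f"user-{i}" for i in range(20_000)]
enabled = sum(in_rollout(u, "ai-assistant", 1.0) for u in users)
print(f"{enabled / len(users):.2%} of users see the feature")
```

Because the bucket depends only on the user and the flag, raising the percentage from 1% to 5% keeps the original 1% enabled and adds new users on top, so nobody flips back and forth during the rollout.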

Personalisation beyond marketing

Hyper-personalisation applies inside too:

- Internal comms: role-specific updates.

- Onboarding: customised training flows.

- Stakeholders: tailored dashboards for execs vs managers.

Solopreneur vs Enterprise parallel

Solopreneurs succeed by niching. Enterprises fail by spreading thin.

- Solopreneur: >£10k MRR with one AI system.

- Enterprise: £10m lost with vague pilots.

A Netflix-grade 90-day plan

Days 0–30: Taxonomy, Segment, Customer.io, Mixpanel dashboards.

Days 31–60: Two VWO tests; Algolia Recommend.

Days 61–90: Bandit allocation; LaunchDarkly flags; holdouts to prove incremental lift.
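For the bandit allocation step, here is a minimal sketch using Thompson sampling, one common bandit method (an assumption on my part; the plan above does not name a specific algorithm). The variant names and the true conversion rates are hypothetical and only exist to drive the simulation.

```python
import random


def thompson_pick(stats: dict[str, list[int]], rng: random.Random) -> str:
    """Pick the variant whose sampled conversion rate is highest.

    stats maps variant name -> [successes, failures].
    """
    draws = {
        name: rng.betavariate(s + 1, f + 1)  # Beta(1, 1) prior = uniform
        for name, (s, f) in stats.items()
    }
    return max(draws, key=draws.get)


def simulate(true_rates: dict[str, float], visitors: int, seed: int = 0) -> dict[str, list[int]]:
    """Route simulated visitors one at a time and record outcomes."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    for _ in range(visitors):
        variant = thompson_pick(stats, rng)
        converted = rng.random() < true_rates[variant]
        stats[variant][0 if converted else 1] += 1
    return stats


# Hypothetical true conversion rates; in production these are unknown.
stats = simulate({"control": 0.04, "variant_b": 0.12}, visitors=5_000)
traffic = {name: s + f for name, (s, f) in stats.items()}
print(traffic)
```

Unlike a fixed 50/50 split, the bandit shifts traffic towards the better variant as evidence builds up. A separate holdout is still needed to prove incremental lift, since the bandit only compares variants against each other.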

FAQs

Why do most AI pilots fail? Scope creep, brittle workflows, poor metrics.

Why does hyper-personalisation win? Narrow focus, measurable lift, scalable growth.

Is it affordable? Yes, lean stacks run roughly $479–528/month using secure vendors.

Visionary closing

Hyper-personalisation is proof that digital transformation can be focused, ethical, and human. We don’t need another billion-dollar AI experiment collapsing under its own hype.

We need loops that learn, workflows that empower, and digital sanctuaries where technology serves people. That’s the future LadyinTechverse stands for.

Sources Referenced

- ISO and AICPA Documentation: Clarification of System and Organization Controls 2 (SOC 2) and International Organization for Standardization 27001 (ISO 27001) frameworks for enterprise-grade compliance.

- MIT Sloan Management Review & Fortune (2025): Coverage of State of AI in Business 2025 reporting that ~95% of AI pilots fail to deliver measurable ROI.

- Netflix Tech Blog (2023–2024): Case studies on artwork personalisation and contextual recommendation systems powering subscriber retention.

- Twilio Segment (2025): Official pricing and security documentation for Customer Data Platform.

- Customer.io (2025): Essentials plan pricing and SOC 2 / ISO 27001 compliance information.

- Amplitude & Mixpanel (2025): Analytics platform pricing and free tier coverage.

- VWO (2025): Market pricing ranges for experimentation platform entry tiers.

- Algolia Recommend (2025): Pricing model ($0.60 per 1,000 requests) and usage scaling.

- Salesforce Marketing Cloud Personalisation+ and Data Cloud (2025): Official pricing benchmarks and enterprise requirements.

- Braze (2025): Vendr marketplace data showing median annual spend of ~$88,507.

- Optimizely (2025): Reported pricing range $3k–$33k+/month for experimentation suite.

- LaunchDarkly (2025): Enterprise feature flag pricing ranges ($20k–$120k annually).

#DigitalTransformation #HyperPersonalisation #AI #Martech #DigitalInnovation #AIpilots #BusinessGrowth #B2BMarketing #LadyinTechverse
